Prompt Playbook: Building What People Want PART 3

Hey Prompt Entrepreneur,

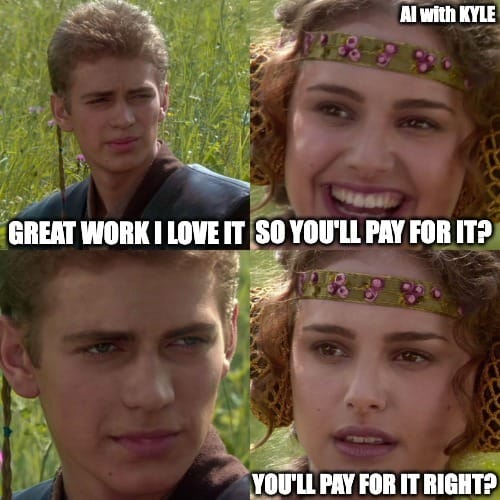

"So what do you think?"

"Yeah, it's good. Nice work."

"Anything you'd change?"

"Not really, looks fine to me."

"Would you use this?"

"Yeah, probably."

You hang up the call feeling... empty. I mean, they said good things, but you've learned absolutely nothing useful. No insights about what confused them, what they actually did, or how you could improve it.

Basically a waste of time. But this is how most people collect feedback. They ask vague questions and get vague answers. Then they wonder why their product still doesn't work properly for real users.

Worse: they’ll protest “I talked to customers!” as a defence. And they did. Technically. They just didn’t do it properly.

Your beta testers are willing to help, but you need to make it easy for them to give you the specific insights you actually need. This comes from asking the right questions.

Let’s get started:

Summary

The right questions

From "it's nice" to actionable insights

Simple external tools

Specific questions that work

Making it easy for testers to help

Why Systematic Feedback Matters

First up we’ve got to systematise this. Random "what do you think?" questions get you polite responses, not useful insights.

Your beta testers want to help. They wouldn’t have volunteered otherwise. But they don't know what kind of feedback you need. They'll default to being nice rather than being helpful. So we need to help them help you, if that makes sense!

You need structured ways to capture the specific information that will actually improve your product:

Usage patterns: What did they actually do? Not what they said they'd do, but what they actually clicked and tried.

Confusion points: Where did they get stuck? What wasn't obvious? What made them pause and think?

Language they use: How do they describe the problem? What words do they use? This becomes your marketing copy later.

Missing expectations: What did they expect to find but didn't? What felt incomplete?

Workflow fit: How does this fit into their actual process? What comes before and after using your tool?

The goal isn't to hear "good job." It's to discover exactly where your assumptions were wrong. Don’t worry, I’ll give you a prompt to construct all of this. First up, let’s chat about the mechanics of how we collect it.

Collection Methods

There’s a spectrum of ways to get feedback. All are good, but some are richer than others. One thing I’d say for now: whilst it’s very nice to have built-in feedback and analytics inside your tool, that adds too much complexity at this stage. It’ll be super valuable later, but first we need to make sure our basic business idea is sound, and we can do that with more basic methods.

So keep this external to your product for now. Don't try to build feedback systems into your app - use simple tools that already work. Here are a few ranging (roughly!) from easiest to most valuable.

Google Forms (Easiest)

Create a simple form with 5-7 specific questions. Send them the link after they've tried your product. Free, easy to set up, organises responses automatically. Make it anonymous if you think that’ll help them be more truthful.

Voice Notes (Underrated)

Ask them to record a quick voice note on their phone while using your product. "Just talk through what you're thinking as you try this." Often more honest than written feedback because people have less time to structure their thoughts. Stream of consciousness is much more useful.

Screen Recording

A step up is asking them to record their screen while using it. Shows you exactly where they get confused. Tools like Loom make this dead simple. But this is still adding an additional layer of difficulty on their side - remember we want to keep this as frictionless as humanly possible!

Quick Calls (Most Valuable)

Nothing beats a 10-minute call where you can ask follow-up questions. This is the holy grail. But it also requires you to step out of your comfort zone, and it requires them to carve out time, which is a pain. So whilst it’s a very powerful method, it’s also more difficult for both you and the tester.

The key: make it as easy as possible for them to give you specific, useful information.

For now I want you to choose one of these methods and use it alongside the prompt below. As always, paste the prompt below your prior work in the same conversation for best results, so the AI has the additional context.

Your AI Prompt for Today

I need to create a feedback collection system for beta testers of my product. Here's my product information: [DESCRIBE YOUR PRODUCT AND MAIN FEATURES]

I want to use [CHOOSE YOUR METHOD: Google Forms / Voice notes / Screen recordings / Quick calls] as my primary feedback collection method.

I want to collect these specific types of feedback:

- Usage patterns: What they actually did, not what they said they'd do

- Confusion points: Where they got stuck or weren't sure what to do next

- Language they use: How they describe the problem in their own words

- Missing expectations: What they expected to see that wasn't there

- Workflow fit: How this fits into their actual process

- Specific improvements: What they'd change to make it more useful

Please help me create:

1. Feedback Collection Setup:

- If Google Forms: 5-7 specific questions that get actionable insights

- If Voice notes: Script for what to ask them to record

- If Screen recordings: Instructions for what to capture

- If Calls: Question list for 10-15 minute conversations

2. Specific Questions Based on My Product:

- Questions about usage patterns specific to my features

- Questions about confusion points relevant to my interface

- Questions about missing expectations for my type of tool

- Questions about workflow integration for my target users

3. Follow-up Sequence:

- When to send the feedback request (immediately after use? next day?)

- How many follow-ups to send if they don't respond

- What to do with non-responders

4. Organisation System:

- How to categorise and track the feedback I receive

- What patterns to look for across multiple responses

- How to prioritise which feedback to act on first

Make everything specific to my product and collection method. I want actionable insights, not generic compliments.

This prompt combines the information about your product with your chosen feedback collection method and preps all the materials and a brief for you. I’ve also put in some extra stuff about follow-up and how to track and organise feedback. All you need to do is implement.
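If you want something a little more structured than a notes file for the tracking and organising part, here is a minimal Python sketch. It is just one way to do it, under my own assumptions: the `FeedbackItem` fields, the category names and the sample notes are all made up for illustration, and you assign the short `theme` label yourself as you read each response. It counts how many different testers raised each theme, so a problem two people hit ranks above something one person mentioned twice.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical category names, mirroring the feedback types in the prompt.
CATEGORIES = {
    "usage", "confusion", "language", "missing", "workflow", "improvement",
}


@dataclass(frozen=True)
class FeedbackItem:
    tester: str    # who said it
    category: str  # one of CATEGORIES
    note: str      # what they said, in their own words
    theme: str     # short label you assign when reading the note


def themes_by_reach(items):
    """Rank (category, theme) pairs by how many distinct testers raised them."""
    testers = defaultdict(set)
    for item in items:
        if item.category not in CATEGORIES:
            raise ValueError(f"unknown category: {item.category!r}")
        testers[(item.category, item.theme)].add(item.tester)
    counts = [(key, len(names)) for key, names in testers.items()]
    # Most widely shared themes first; ties broken alphabetically.
    return sorted(counts, key=lambda pair: (-pair[1], pair[0]))


# Made-up sample responses, purely for illustration.
items = [
    FeedbackItem("tester_a", "confusion", "Didn't know where to click after signup", "unclear next step"),
    FeedbackItem("tester_b", "confusion", "Sat on the dashboard for a while", "unclear next step"),
    FeedbackItem("tester_b", "missing", "Expected an export button", "no export"),
    FeedbackItem("tester_a", "confusion", "Unsure again after uploading", "unclear next step"),
]

ranked = themes_by_reach(items)
for (category, theme), reach in ranked:
    print(f"{reach} tester(s) | {category} | {theme}")
```

A spreadsheet with the same four columns does the same job. The point is the habit: tag every response, then act first on the themes that the most testers share.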

Types of Feedback to Collect

This will get you started but it’s useful (especially if you are doing calls!) to know what you are looking out for. Focus on gathering information that will actually change how you build:

What they actually did: "Walk me through exactly what you clicked and in what order."

Where they hesitated: "Was there any point where you weren't sure what to do next?"

How they describe it: "In your own words, what problem does this solve?"

What they expected: "Was there anything you expected to see that wasn't there?"

How it fits their process: "Where would this fit into your normal workflow?"

What confused them: "What part felt unclear or confusing?"

What they'd change: "If you could change one thing to make this more useful, what would it be?"

Notice how these are all specific questions rather than general "what do you think?" prompts. These sorts of questions will get you the actionable info you need. They are just examples to model, and the prompt will help you come up with more.

Your Deliverable Today

Set up your feedback collection system:

Choose your primary collection method (form, screen record, calls, etc.)

Use the AI prompt to create your specific questions and setup

Create the actual tool (Google Form, call script, etc.)

Test it with a friend to make sure it's clear and easy

Have it ready to send to beta testers after they've used your product

Don't wait for perfect. Get a basic system working that you can improve as you learn what questions work best. We can (and will) add more sophisticated systems later, directly into your product. But right now keep moving forward.

Build in Public Content

Share your approach:

"Day 23 of AI Summer Camp: Setting up feedback collection for beta testers.

Moving beyond 'what do you think?' to specific questions that get actionable insights.

Using [your chosen method] to capture usage patterns, confusion points, and missing expectations.

The goal: learn exactly where my assumptions were wrong so I can fix them.

How do you collect useful feedback from early users? Any tips?"

What's Next?

Tomorrow we dive into being obsessively helpful to your beta testers. Because collecting feedback is only half the job - you also need to support them, watch them use your product live, and have real conversations about their experience. You'll learn how to schedule calls, conduct live screen shares, and be genuinely helpful while gathering the insights you need.

Keep Prompting,

Kyle
